Key Takeaways

- Meta uses AI to identify underage accounts, even if users falsify birthdates, and enforces Teen Account safeguards automatically.

- Teens under 16 now need parental approval to livestream on Instagram or to turn off the nudity filter in direct messages.

- Teen Accounts expand to Facebook and Messenger, applying private profiles, content restrictions, and screen-time reminders by default.

- According to Meta, over 97% of younger teens keep the default protective settings, though critics question whether enforcement is consistent across its global user base.

- Updates align with regulatory pressures like the UK’s Online Safety Act, signaling Meta’s proactive stance on child safety.

AI-Powered Age Detection and Enforcement

- Meta’s AI analyzes behavioral signals, such as birthday messages (e.g., “Happy 15th!”), interaction patterns, and content preferences, to flag potential underage users.

- Accounts suspected of belonging to teens are automatically placed into restricted Teen Accounts, which include:

  - Private profiles by default

  - Blocks on messages from strangers

  - Sensitive content filters for posts and reels

- Users can appeal misclassifications by submitting government ID or a video selfie via third-party partner Yoti.
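To make the idea of a behavioral signal concrete, here is a toy sketch of how a single signal, a birthday greeting like “Happy 15th!”, could be turned into an age hint. It is not Meta’s method: the regular expression, the function names `age_hint_from_message` and `looks_like_teen`, and the 13 to 17 range are all assumptions made for illustration. A production system would presumably weigh many signals in a trained model rather than rely on one rule.

```python
import re
from typing import List, Optional

# Hypothetical pattern: matches greetings such as "Happy 15th!" or "happy 16th birthday".
BIRTHDAY_AGE = re.compile(r"\bhappy\s+(\d{1,2})(?:st|nd|rd|th)\b", re.IGNORECASE)


def age_hint_from_message(text: str) -> Optional[int]:
    """Return the age mentioned in a birthday greeting, or None if there is none."""
    match = BIRTHDAY_AGE.search(text)
    return int(match.group(1)) if match else None


def looks_like_teen(messages: List[str], stated_age: int) -> bool:
    """Flag an account that claims to be an adult but receives teen-age greetings.

    The 13-17 range is an assumption for this sketch, not a documented threshold.
    """
    hints = [age for age in map(age_hint_from_message, messages) if age is not None]
    return stated_age >= 18 and any(13 <= age <= 17 for age in hints)
```

A flag from a rule like this would only be a starting point; the appeal path described above exists precisely because such signals can misclassify adults.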

Parental Permission Requirements

- Live streaming: Teens under 16 must obtain parental consent to broadcast live on Instagram, addressing concerns about real-time stranger interactions.

- Nudity protection: Direct messages containing suspected explicit images are blurred by default. Teens cannot disable this feature without guardian approval.

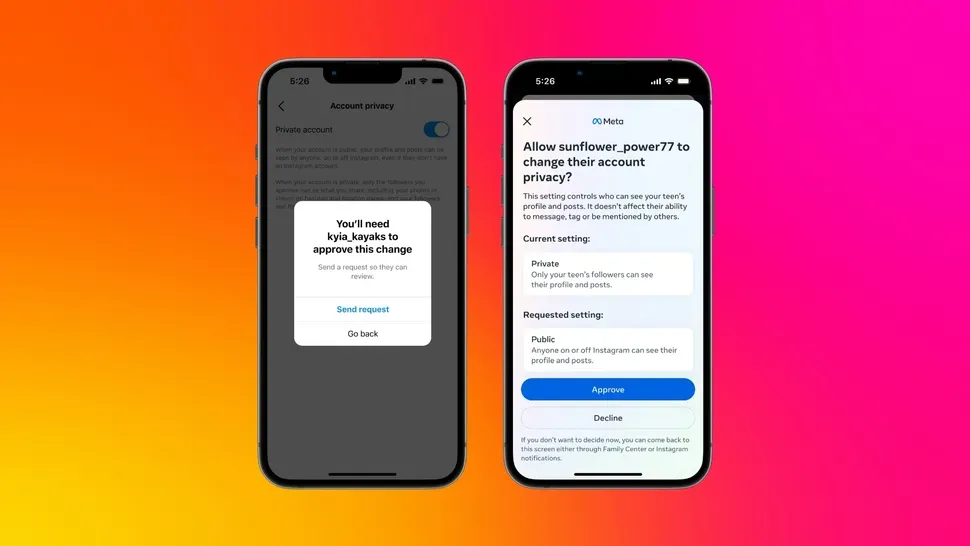

- Account visibility: Switching from private to public profiles now triggers a parental permission prompt for users under 16.
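The three permission rules above share one shape: a user under 16 may perform a gated action only with guardian approval. The sketch below expresses that rule in Python. The action labels, the `Request` class, and `is_allowed` are hypothetical names chosen for this example and do not come from any Meta API.

```python
from dataclasses import dataclass

# Actions the article lists as needing guardian approval for users under 16.
# These string labels are illustrative, not identifiers from a real API.
GATED_ACTIONS = {"go_live", "disable_nudity_blur", "make_profile_public"}
PARENTAL_APPROVAL_AGE = 16


@dataclass
class Request:
    age: int
    action: str
    guardian_approved: bool = False


def is_allowed(req: Request) -> bool:
    """Allow ungated actions and users 16 or older; otherwise require approval."""
    if req.age >= PARENTAL_APPROVAL_AGE or req.action not in GATED_ACTIONS:
        return True
    return req.guardian_approved
```

For example, `is_allowed(Request(age=15, action="go_live"))` is `False` until a guardian approves, while the same request from a 16-year-old passes without a prompt.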

Cross-Platform Expansion of Teen Accounts

- Initially exclusive to Instagram, Teen Accounts now roll out to Facebook and Messenger in the U.S., UK, Australia, and Canada, with global expansion planned.

- Key platform-specific safeguards include:

  - Facebook: Restricted visibility in search results and reduced content recommendation reach.

  - Messenger: Stranger messaging blocks and “Take a Break” reminders after 60 minutes of use.

- Meta reports 54 million teens enrolled in protective settings globally as of April 2025.

Content and Messaging Safeguards

- Algorithmic adjustments: Teen feeds prioritize educational and positive content while deprioritizing viral challenges or extreme diet trends.

- Overnight notifications: App usage between 10 p.m. and 6 a.m. triggers automatic “Wind Down” mode, pausing non-essential alerts.

- Screen-time dashboards: Teens receive weekly reports detailing app usage patterns and comparative data with peers.
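An overnight window such as 10 p.m. to 6 a.m. crosses midnight, so a plain `start <= now < end` comparison does not work. The following sketch shows one way to check it, using the hours quoted above as defaults. The function name `in_wind_down` and the choice to treat the end time as exclusive are assumptions for illustration, not details from Meta.

```python
from datetime import time

# Window taken from the text above; both values are configurable defaults.
WIND_DOWN_START = time(22, 0)
WIND_DOWN_END = time(6, 0)


def in_wind_down(now: time,
                 start: time = WIND_DOWN_START,
                 end: time = WIND_DOWN_END) -> bool:
    """Return True if `now` falls inside the window; start inclusive, end exclusive."""
    if start <= end:
        # Window within a single day, e.g. 13:00 to 15:00.
        return start <= now < end
    # Window wraps past midnight, e.g. 22:00 to 06:00.
    return now >= start or now < end
```

The check uses local wall-clock time only; a real implementation would also have to decide how to handle time zones and daylight saving changes.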

Implications for Parents and Regulators

- Parental oversight tools: Meta’s Family Center portal now allows guardians to:

  - Review teen account settings

  - Set daily time limits

  - Monitor follower/following lists

- Regulatory alignment: Updates address mandates like the UK Online Safety Act, which requires platforms to minimize minors’ exposure to harmful content.

- Industry precedent: Meta advocates for app-store-level age verification, urging legislation requiring parental consent for under-16 social media access.

Meta’s layered approach combines AI enforcement with cross-platform consistency to create a safer environment for teens, though effectiveness hinges on transparent execution. For businesses, these changes highlight the growing operational cost of balancing user safety with engagement metrics in regulated markets.