Table of Contents

When AI systems learn from historical data, they inherit more than information—they inherit the tragedies, frauds, and errors of the past, creating digital ghosts that continue shaping our decisions long after their human origins have died.

AI training data contains hidden remnants of past disasters that continue influencing modern decisions through machine learning systems.

Key Takeaways

- AI systems trained on historical data unknowingly perpetuate the influence of past tragedies and criminal activities

- Bloomberg GPT was partially trained on Enron emails, meaning financial fraud patterns now influence AI financial advice

- The famous Reinhart-Rogoff economics paper contained a simple Excel error that justified global austerity measures for years

- HeLa cells from Henrietta Lacks continue advancing medical research decades after her death, representing how biological "data" persists

- Most AI companies don't disclose their training data sources, making it impossible to identify these digital ghosts

- Data replication in AI systems works like cancer cells—multiplying endlessly without dying out

- Understanding AI training data sources is as important as knowing food ingredients for public safety

- These digital ghosts scale much faster through AI than through traditional human decision-making processes

The Immortal Legacy of Henrietta Lacks

Henrietta Lacks died of cancer in 1951, but her cells live on as one of medicine's most important research tools. Born in 1920 in a Virginia log cabin, Lacks passed away at just 31 years old, leaving behind five children and an unmarked grave.

- Her cancer cells, dubbed HeLa cells, possess an unusual genetic structure with 35-40 chromosome pairs instead of the normal 23, making them effectively immortal

- These cells continue replicating endlessly in laboratories worldwide, contributing to AIDS research, polio vaccines, and countless biomedical breakthroughs

- Unlike normal human cells that die after a certain number of divisions, HeLa cells replicate indefinitely—behaving exactly like digital ghosts

- The cells represent how biological data persists and shapes medical decisions long after the original person has died

- Medical researchers worldwide unknowingly work with genetic material from a woman whose burial site remains unknown

This biological persistence mirrors how digital information replicates through AI systems. Just as Lacks' cells continue influencing medical research, historical data embedded in AI training sets continues shaping algorithmic decisions across industries.

The $185 Billion Spreadsheet Error That Broke Economies

Harvard economists Carmen Reinhart and Kenneth Rogoff published a seemingly authoritative paper in 2010 titled "Growth in a Time of Debt." Their conclusion appeared straightforward: when public debt exceeds 90% of GDP, economic growth collapses.

- Political leaders including Paul Ryan and David Cameron used this research to justify sweeping austerity measures across healthcare, education, and social services

- The paper's influence stemmed partly from Harvard's prestigious reputation—journals didn't even require peer review for such esteemed economists

- Graduate student Thomas Herndon later gained access to the actual spreadsheets and discovered a fundamental error in the calculations

- Reinhart and Rogoff had simply excluded the last five rows of data, omitting countries like Denmark, Canada, Belgium, Austria, and Australia from their analysis

- This basic Excel mistake provided the academic justification for austerity policies that affected millions of lives globally

The error demonstrates how seemingly authoritative data can replicate through policy decisions like a virus. Once established, the flawed conclusions continued influencing economic policy long after publication, creating what Slavin calls "erratic Canadian ghosts" haunting domestic policy worldwide.

- Economic data, like cancer cells, replicates and expands indefinitely once released into academic and policy circles

- The paper's influence persisted even after the error was discovered, showing how institutional momentum preserves flawed conclusions

- This case illustrates why transparency in data methodology becomes crucial for preventing systemic policy errors

Bloomberg GPT: When AI Learns from Financial Criminals

Bloomberg made headlines by openly publishing details about their proprietary GPT model for finance, including their training data sources. This transparency revealed something disturbing: the AI learned communication patterns partly from the Enron email corpus.

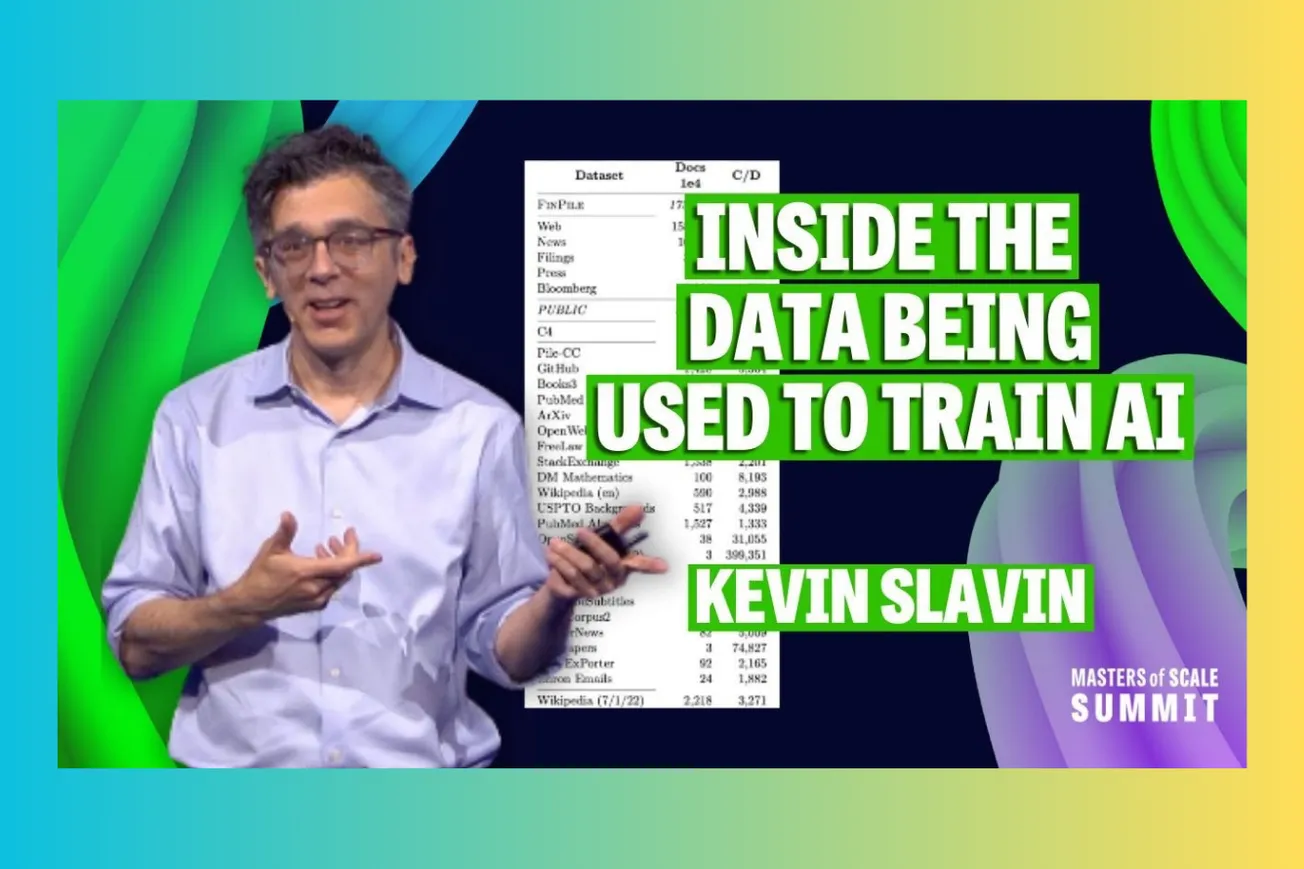

- Bloomberg GPT was trained on a combination of public datasets including YouTube subtitles, GitHub code repositories, and Wikipedia content

- Hidden among these sources were 500,000 emails from Enron Corporation, the energy company that collapsed in 2001 after perpetrating massive financial fraud.

- Enron represented the seventh-largest company in the United States before its $185 billion collapse left California without electricity and pension funds without money

- The company's email communications reveal the actual structure of financial fraud—showing how criminals coordinate through digital channels

The Enron emails became public domain in 2003 when prosecutors used them as evidence in criminal proceedings. Researchers immediately recognized their value as training data for artificial intelligence systems.

- Network analysis of Enron emails reveals fraud has a distinctive communication pattern—resembling a COVID spike protein rather than normal corporate interaction

- Healthy companies show distributed communication networks, while fraudulent organizations demonstrate isolated clusters of conspirators

- These communication patterns, embedded in AI training data, potentially influence how modern financial AI systems interpret and generate business communications

- Bloomberg's AI may unknowingly perpetuate linguistic patterns and decision-making frameworks derived from criminal conspiracy

The implications extend beyond Bloomberg. Most AI companies refuse to disclose their training data sources, making it impossible to identify what other digital ghosts influence their systems.

The Scaling Problem: How Digital Ghosts Multiply

Kevin Slavin argues that AI amplifies the influence of historical tragedies far beyond what traditional human systems could achieve. While past errors might have influenced policy through academic papers or institutional memory, AI systems process and apply historical patterns at unprecedented scale.

- AI systems replicate training data patterns billions of times faster than human decision-makers could spread flawed conclusions

- Unlike human institutions that might eventually recognize and correct errors, AI systems can perpetuate historical biases indefinitely

- Each AI interaction potentially reinforces patterns learned from problematic training data, creating compound effects over time

- The speed of AI deployment means digital ghosts can influence millions of decisions before anyone identifies their presence

Modern society demands transparency about food ingredients because of Upton Sinclair's 1906 exposé about unsafe meat processing. Slavin suggests we need similar transparency about AI training data ingredients.

- Most people know more about their breakfast cereal contents than about the data sources influencing AI systems that affect their healthcare, finance, and employment decisions

- AI companies generally treat training data as proprietary information, preventing public oversight of potential problems

- Without transparency requirements, society cannot identify or address the influence of digital ghosts in AI systems

- The scale and speed of AI deployment make data transparency even more critical than food safety regulation

Common Questions

Q: What makes Bloomberg GPT different from other AI models?

A: Bloomberg publicly disclosed their training data sources, revealing they used Enron emails alongside other datasets.

Q: How do digital ghosts actually affect AI behavior?

A: AI systems learn communication patterns and decision-making frameworks from training data, potentially replicating historical biases and errors.

Q: Why should we care about old data in AI systems?

A: Historical data shapes how AI systems interpret information and make recommendations that affect real-world decisions.

Q: Can these digital ghosts be removed from AI systems?

A: Once embedded in training data, removing specific influences requires retraining models, which is expensive and technically challenging.

Q: How can we identify digital ghosts in AI systems?

A: Companies must provide transparency about training data sources, similar to food ingredient labels.

AI systems inherit the tragedies and errors of the past through their training data, creating digital ghosts that continue shaping our future decisions. Without transparency about these data sources, we cannot identify or address their ongoing influence on the systems that increasingly govern our lives.