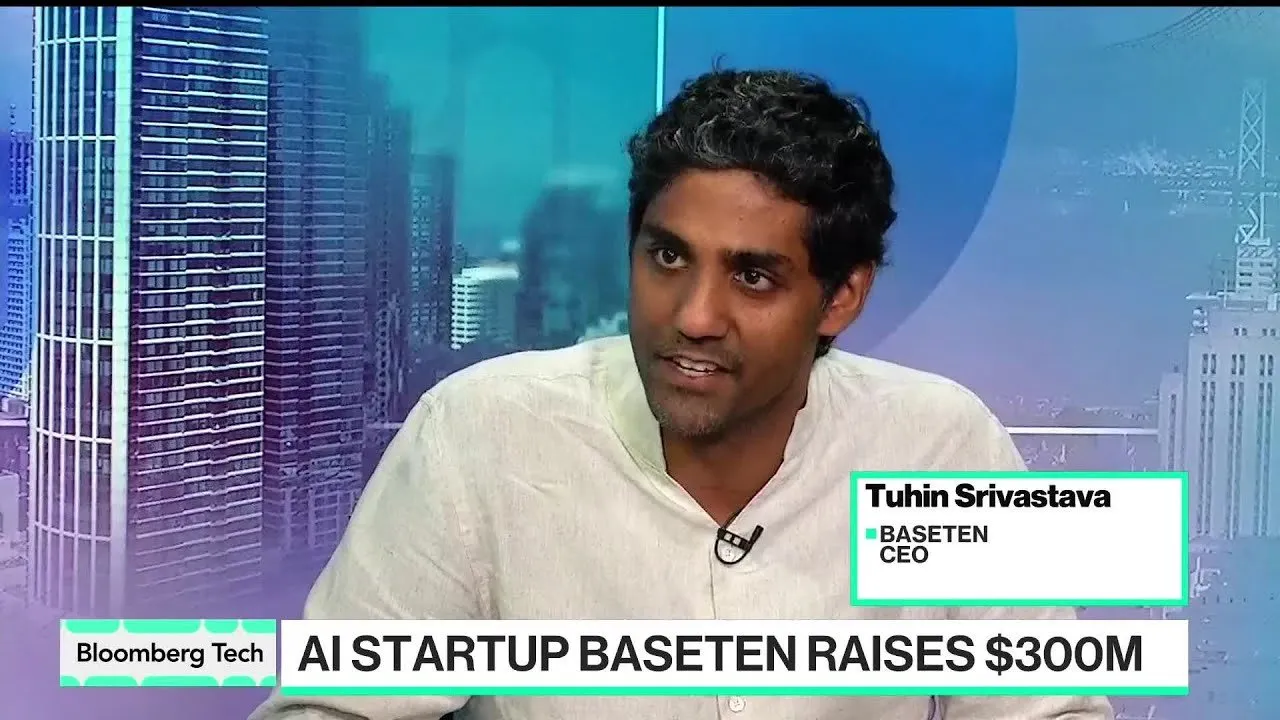

AI infrastructure startup Baseten has secured $300 million in a new funding round that doubles its valuation to $5 billion in just six months, signaling intense investor appetite for the "inference" layer of the artificial intelligence stack. Backed by venture firm Conviction and chip giant Nvidia, the San Francisco-based company plans to use the capital to scale its compute capacity and recruit scarce, highly paid engineering talent to support the next generation of AI-native applications.

Key Points

- Valuation Surge: Baseten raised $300 million, jumping to a $5 billion valuation less than a year after its previous financing.

- Strategic Backers: The round includes participation from Nvidia and Sarah Guo’s Conviction, emphasizing a "secular bet" on AI infrastructure.

- Focus on Inference: The company targets the inference market—running models after they are trained—which founders describe as potentially the "largest market that will ever exist."

- Talent War: A significant portion of the capital is allocated to hiring specialized engineers, a talent pool Srivastava estimates at roughly 1,000 people globally.

The Shift from Training to Inference

As the generative AI boom matures, capital is shifting from the initial training of massive foundation models to "inference"—the process of actually running those models to generate outputs for end-users. Baseten has positioned itself as the critical infrastructure layer that allows companies to run these models reliably at scale.

According to the company, demand for inference is outpacing demand for training as high-growth companies such as Cursor, Abridge, OpenEvidence, and Notion move from experimentation to mass deployment. Tuhin Srivastava, co-founder of Baseten, argues that while training is capital intensive, the larger long-term economic opportunity lies in operating these models day to day.

"The way we think about it is that inference is probably the largest market that will ever exist. The amount of compute that we're going to need to support this is going to be very large. Compute is a capital-intensive business, and that's the business we're going after."

The company operates on a "pay-as-you-go" business model, aligning its revenue directly with the usage growth of its customers. This approach allows Baseten to capture value as its clients—many of whom are the fastest-growing AI startups in the sector—scale their own user bases.

Strategic Alignment with Nvidia

Nvidia's participation as an investor highlights the symbiotic relationship between hardware makers and the companies that run and manage infrastructure on top of their chips. While Nvidia dominates the chip market, Baseten focuses on the software and orchestration layer that makes those chips accessible and efficient for developers.

Sarah Guo, founder of Conviction and an early investor in Baseten, noted that the industry is seeing "enormous investments" in accelerators and chips, driven by forecasts of trillions of dollars in future compute spending. However, she emphasized that hardware alone does not solve the deployment bottleneck.

"The problem today is just meeting the demand... We have the same vision for what needs to happen to enable all of these AI-native startups. Inference engineering, RL [Reinforcement Learning] as a service, and the optimization of models to make them cheap and efficient to run—these are like the three hottest jobs in AI right now."

Srivastava clarified that Baseten does not view Nvidia as a competitor despite the chipmaker's expanding software footprint. "We've been partners with Nvidia for almost five years now," Srivastava said. "We work really closely with the chips they provide... We think everything is a rising tide."

Market Stickiness and Talent Scarcity

A key differentiator for Baseten is the "stickiness" of inference workloads compared with model training. Training is often a "job-by-job" process in which compute is spun up and torn down, whereas inference requires continuous, low-latency availability. Once a customer integrates its application with Baseten’s infrastructure, switching becomes costly, because leaving would mean taking on the engineering burden of managing specialized kernels and multi-cloud environments in-house.

Guo highlighted that customers prefer to avoid the "enormous engineering burden" of maintaining their own infrastructure.

"Inference has the stickiest characteristics of any business I've ever seen... Customers come with us, they scale with us. They don't want to take on the burden of managing across ten different clouds or owning their own capacity."

However, scaling this infrastructure presents a significant human capital challenge, and much of the new funding will go toward recruiting. Srivastava estimated that perhaps only 1,000 engineers worldwide can solve the complex systems engineering problems involved in building a large-scale inference cloud. Backed by the new capital and its $5 billion valuation, Baseten aims to recruit aggressively from this limited pool to solidify its position as the default "inference cloud" for the AI industry.

Baseten is also deploying capital through its "Embed" incubator program, which gives early-stage startups credits and expertise to lower their barrier to entry, effectively seeding its next generation of enterprise customers.