
The father of prompt engineering just dropped some pretty wild predictions about where artificial intelligence is heading, and honestly, it's both thrilling and a little mind-bending. Richard Socher, the fourth most cited AI researcher in the world, recently shared insights that could reshape how we think about everything from scientific discovery to our daily lives.

Key Takeaways

- Richard Socher believes he could build digital super intelligence with a couple billion dollars in just 18-24 months

- Open source AI models like DeepSeek are rapidly catching up to closed models, fundamentally changing the competitive landscape

- AI will likely generate a century's worth of biomedical breakthroughs in the next 5-10 years, potentially doubling human lifespan

- We're simultaneously overbuilding data centers while underestimating our overall energy needs for the AI revolution

- The next frontier isn't just better chatbots – it's AI agents that can automate complex knowledge work end-to-end

- Humanoid robots are advancing faster than expected, but specialized robots will likely dominate specific use cases

- The business model for pure foundational AI companies is becoming more like telecommunications – expensive infrastructure with questionable value capture

The Grok 3 Reality Check: When Execution Meets Exponential Tech

Elon Musk's latest AI announcement had everyone buzzing, and for good reason. Building what he claims is the world's largest GPU cluster in just 122 days? That's the kind of execution that makes even seasoned AI experts do a double-take.

- Hardware meets software mastery: While most AI folks focus purely on software, Musk's background with Tesla and SpaceX gives him unique advantages in scaling both hardware and software simultaneously

- The coherence breakthrough: Getting massive GPU clusters to work together efficiently has been considered nearly impossible by many experts, yet Musk's team apparently cracked it

- Resource acceleration: With $6 billion and the right team, you can move incredibly fast in exponential technologies like AI

- Benchmark reality: Despite the hype, early reports suggest Grok 3 performs slightly below top models like Claude and GPT, though still impressively close

What's fascinating here isn't just the speed – it's what this tells us about the broader AI landscape. As Socher puts it, "with exponential technologies like AI, enough resources you can go hard pretty fast." The days when AI development required decades of research are over. Now it's about execution, capital, and vision.

The real innovation might be in cluster management itself. Previous attempts at scaling AI training across massive GPU farms have hit coordination problems that seemed insurmountable. If Musk's team actually solved these infrastructure challenges, that's potentially as valuable as the AI models themselves.

- Abstraction layer evolution: Companies like Anyscale are making it easier to jump from 5 GPUs to 5,000 GPUs with just a few lines of code

- The new AI stack: We're operating at higher levels of abstraction thanks to AI itself, creating feedback loops that accelerate development

- Capital requirements shifting: What once required cutting-edge research now requires cutting-edge resources and execution
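To make the abstraction-layer point concrete, here is a minimal sketch of the idea that scaling up can mean changing one parameter rather than rewriting the work itself. It uses Python's standard-library thread pool on a single machine. It is not Anyscale's or Ray's API. `train_shard` is a hypothetical placeholder for real work.

```python
from concurrent.futures import ThreadPoolExecutor


def train_shard(shard_id: int) -> float:
    """Hypothetical placeholder for work done on one data shard."""
    # A real job would run a training step here; this returns a dummy value.
    return 1.0 / (shard_id + 1)


def run(num_workers: int, num_shards: int) -> list:
    """Run train_shard over every shard with a given amount of parallelism."""
    # Scaling up means changing num_workers; train_shard itself is untouched.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # pool.map returns results in input order, regardless of worker count.
        return list(pool.map(train_shard, range(num_shards)))


if __name__ == "__main__":
    # Same results whether 5 or 50 workers share the load.
    print(run(num_workers=5, num_shards=8) == run(num_workers=50, num_shards=8))
```

Distributed frameworks apply the same principle across many machines and GPUs. The hard parts they hide, such as scheduling, data movement, and failure recovery, are exactly what this toy version leaves out.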

Redefining Intelligence: Why AGI Definitions Matter More Than You Think

Here's where things get philosophically interesting. Everyone talks about AGI, but what the hell does that actually mean? Socher's take is refreshingly practical and slightly terrifying.

- The 80% automation threshold: One pragmatic definition suggests AGI arrives when 80% of digitized work can be automated – that's already a massive chunk of global GDP

- Intelligence has dimensions: Visual intelligence, language reasoning, mathematical reasoning, social intelligence – these aren't just different skills, they're potentially unbounded in different directions

- Learning efficiency matters: True intelligence should be able to learn with much less data, like humans do with one or two examples

- Physical manipulation isn't required: A deaf, blind, or paraplegic person can be highly intelligent, so requiring physical capabilities for AGI is unnecessarily limiting

The two-year timeline for digital super intelligence that Socher mentioned isn't just about building bigger models. It's about solving specific research bottlenecks that money and focused effort could crack relatively quickly.

- Research vs. product development: Building products and generating revenue is meaningful, but pure research still has breakthrough potential that's being underexplored

- Sequence modeling universality: Large language models are actually neural sequence models that can be trained on any sequence: actions, proteins, and potentially even thoughts

- The financial definition: If we can automate 80% of digitized workflows, we're already talking about transforming massive portions of the economy

What's particularly interesting is how Socher frames intelligence as potentially unbounded in certain dimensions. Knowledge accumulation could theoretically continue until you hit physics-based boundaries – basically the speed of light limiting how fast you can gather information from sensors around the universe. That's a scale of intelligence that makes current AI debates seem quaint.

Science at Lightspeed: The Discovery Revolution

This might be the most exciting part of the entire AI revolution. We're not just talking about better search results or more efficient coding – we're talking about fundamentally accelerating human knowledge.

- The antibiotic resistance breakthrough: Recent AI systems replicated 10 years of antibiotic resistance studies in just 48 hours, showing how dramatically AI can compress research timelines

- Protein language mastery: Socher's team created proteins that were 40% different from naturally occurring ones, compared to Nobel Prize-winning directed evolution work that achieved only 3% differences

- Century-scale acceleration: Anthropic's CEO Dario Amodei predicts a century's worth of biomedical research in the next 5-10 years

- Universal enthusiasm: Unlike other AI applications that worry people about job displacement, everyone wants more scientific breakthroughs and medical advances

The protein work particularly stands out because it shows AI isn't just finding patterns in existing data – it's learning the fundamental "grammar" of biological systems. When you can synthesize proteins that fold properly and have predicted properties while being 40% different from anything in nature, you're essentially speaking biology's native language.
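One simple way to read a figure like "40% different" is the share of positions at which two sequences disagree. The sketch below assumes the two sequences are already aligned and equal in length. That is a deliberate simplification, because real comparisons usually rely on alignment tools that handle insertions and deletions. It is not necessarily how the cited work measured difference, and the sequences are made up.

```python
def percent_difference(seq_a: str, seq_b: str) -> float:
    """Share of positions (in %) that differ between two pre-aligned sequences.

    Simplification: assumes equal-length sequences that are already aligned,
    with no insertions or deletions.
    """
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must be aligned to the same length")
    if not seq_a:
        raise ValueError("sequences must be non-empty")
    mismatches = sum(a != b for a, b in zip(seq_a, seq_b))
    return 100.0 * mismatches / len(seq_a)


# Made-up ten-residue fragments, for illustration only.
print(percent_difference("MKTAYIAKQR", "MKTAYIAKQL"))  # 10.0
```

By this measure, a protein that is 40% different from its closest natural relative disagrees at 4 of every 10 aligned positions. A 3% difference is closer to 1 position in 33.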

- Simulation enablement: Anything you can simulate, AI can potentially solve, whether it's chess, Go, or molecular interactions

- Wet lab integration: Companies are building robotic laboratories that can run AI-designed experiments hundreds of times faster than humans

- Feedback loop acceleration: The combination of theoretical AI models and physical experimentation creates incredibly tight iteration cycles

- Materials science potential: Imagine prompting an AI to "design a room-temperature superconductor using these elements at this cost" – that's not science fiction anymore

The longevity implications alone are staggering. If we can really compress decades of biomedical research into years, we're talking about potentially solving aging, cancer, and viral infections within this decade. Larry Ellison's recent announcement about personalized mRNA vaccines against individual cancers is just the beginning.

The Energy Paradox: Overbuilding and Underestimating Simultaneously

Here's a counterintuitive insight that caught my attention: we might be overbuilding data centers while simultaneously underestimating our energy needs. How's that possible?

- The DeepSeek lesson: Massive breakthroughs are happening with much smaller computational budgets, suggesting training efficiency is improving faster than expected

- Demonetization curves: The cost per AI transaction is dropping so rapidly that previous capacity planning becomes obsolete before construction finishes

- Jevons Paradox in action: As AI becomes more efficient, we'll use it everywhere (personal assistants, tutors, health teams), creating massive demand

- Water shortage reality: Most resource shortages are actually energy problems in disguise – with enough energy, you can desalinate ocean water and solve water scarcity
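The "overbuilding and underestimating" tension can be illustrated with a stylized toy model of the Jevons effect. The model rests on two assumptions, both simplifications rather than forecasts: price per query is proportional to the energy each query needs, and demand has constant elasticity.

```python
def total_energy(energy_per_query: float, elasticity: float,
                 demand_scale: float = 1.0, price_per_energy_unit: float = 1.0) -> float:
    """Total energy used under a toy constant-elasticity demand model.

    Assumptions: price per query is proportional to energy per query,
    and demand follows queries = demand_scale * price ** (-elasticity).
    """
    price = price_per_energy_unit * energy_per_query
    queries = demand_scale * price ** (-elasticity)
    return queries * energy_per_query


for elasticity in (0.5, 1.0, 1.5):
    before = total_energy(1.0, elasticity)
    after = total_energy(0.5, elasticity)  # each query now needs half the energy
    print(f"elasticity={elasticity}: total energy {before:.3f} -> {after:.3f}")
```

When demand is inelastic (elasticity below 1), halving the energy per query lowers total energy use. When demand is elastic (above 1), the same efficiency gain raises total use, because query volume grows faster than per-query energy falls. In this toy model, each data center may need to handle less work per query while total energy demand still climbs.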

The Microsoft data center lease cancellations mentioned in recent news make more sense in this context. Companies are building for computational needs based on six-month-old projections, but AI efficiency is improving so fast that those projections become invalid.

- Energy vs. data centers: We'll definitely need more energy overall, but perhaps less concentrated in traditional data center configurations

- Infrastructure timing: The challenge is matching infrastructure buildout timelines with the actual evolution of AI computational needs

- Geographical distribution: As AI becomes more efficient, we might see more distributed computing rather than massive centralized clusters

Socher's broader point about energy solving many human problems is fascinating. Desert regions could become habitable with enough energy for air conditioning and water desalination. Food production could scale dramatically. Climate challenges could become engineering challenges rather than existential threats.

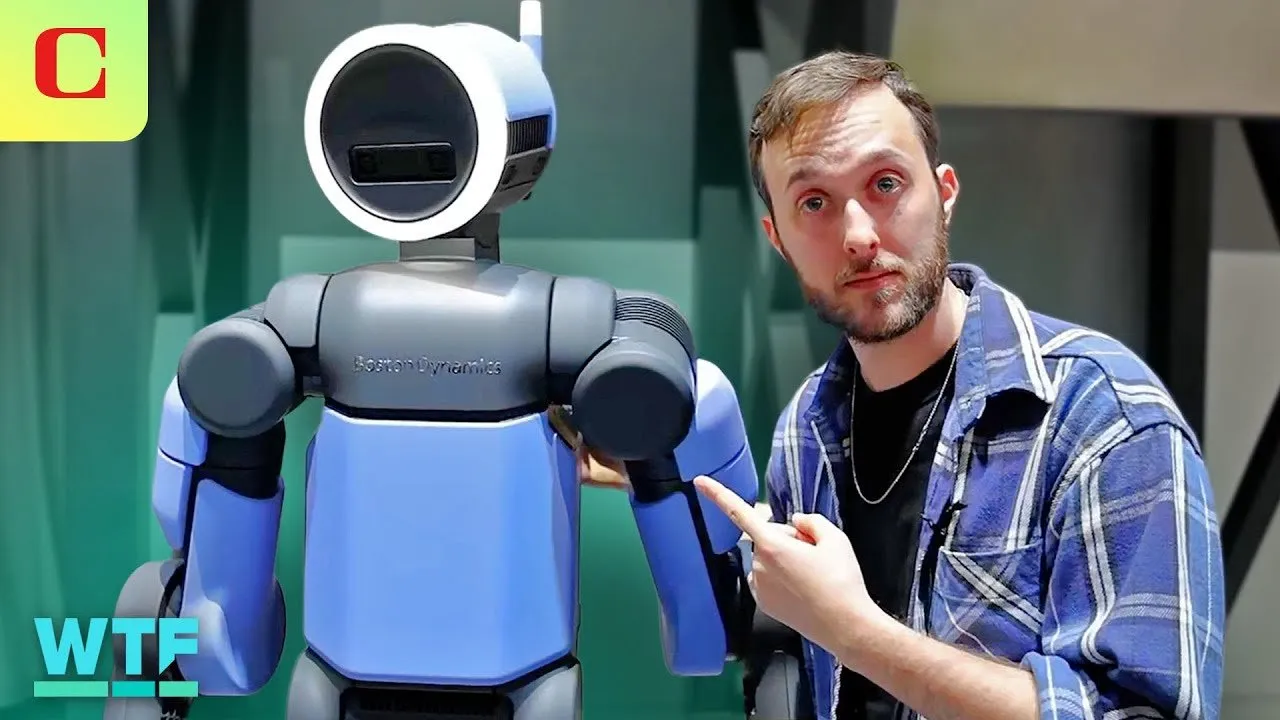

Robots in Reality: Beyond the Uncanny Valley

The humanoid robot discussion reveals some interesting tensions in how we think about AI in the physical world. Everyone's building human-shaped robots, but is that actually the best approach?

- Form follows function debate: Specialized robots like dishwashers and Roombas work because they're designed for specific tasks, not general human mimicry

- The collaboration argument: Instead of one robot with four arms, why not two robots working together? Or better yet, one robot with seven arms?

- Comfort factor: Humans might prefer humanoid robots in their homes, even if wheel-based robots with multiple arms would be more efficient

- Data collection phase: Early humanoid robots will likely be remote-controlled by humans to collect training data, raising privacy concerns about having operators in other countries see inside your home

The recent demos from companies like Figure, 1X, and others show remarkable progress in just the past year. Five years ago, robots could barely walk without falling over. Now they're collaborating on complex tasks and moving with increasingly natural motions.

- Environment standardization: Self-driving cars work partly because roads are standardized; houses have much more variation, making household robotics harder

- Use case specificity: The companies that nail one important use case thoroughly will have massive advantages over generalist approaches

- Capital intensity: Robotics requires enormous upfront investment with uncertain payoff timelines

- Fast follower risk: Can companies reverse-engineer successful robotics solutions and leapfrog the expensive research phase?

The T-1000 reference Socher made is intriguing – most companies are building Terminator-style mechanical robots, but what about liquid metal or other non-traditional approaches? The assumption that robots need to look human might be limiting our imagination.

The Agent Revolution: When AI Takes Action

This is where things get really practical. We're moving beyond AI that just answers questions to AI that actually does things for you. Socher's platform already has over 50,000 custom agents built by users, showing real demand for task automation.

- Marketing automation example: An agent that takes a PDF of new product features and automatically creates email campaigns, LinkedIn messages, and competitive analysis

- Research acceleration: Journalists using agents to research topics across 50 different sources and synthesize findings, reducing multi-day tasks to 2-3 hours

- Investment analysis: VC firms using agents to analyze data rooms and automatically calculate metrics like net dollar retention and LTV/CAC ratios

- Knowledge work transformation: Any repeatable knowledge work process can potentially be automated through careful prompting and workflow design
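As an illustration of the data-room arithmetic such an agent might automate, here is a minimal sketch using common simplified definitions of net dollar retention and customer lifetime value. Firms define these metrics in slightly different ways. The field names are hypothetical and the figures are made up.

```python
from dataclasses import dataclass


@dataclass
class CohortRevenue:
    """Recurring revenue for one customer cohort over a period (hypothetical fields)."""
    starting_arr: float   # recurring revenue from the cohort at period start
    expansion: float      # upsells and cross-sells from those same customers
    contraction: float    # downgrades
    churned: float        # revenue lost from customers who left


def net_dollar_retention(c: CohortRevenue) -> float:
    """NDR = (start + expansion - contraction - churn) / start."""
    return (c.starting_arr + c.expansion - c.contraction - c.churned) / c.starting_arr


def customer_ltv(monthly_revenue: float, gross_margin: float, monthly_churn: float) -> float:
    """Simple LTV: monthly gross profit per customer divided by monthly churn rate."""
    return monthly_revenue * gross_margin / monthly_churn


def ltv_to_cac(ltv: float, cac: float) -> float:
    """How many times over a customer repays what it cost to acquire them."""
    return ltv / cac


# Made-up figures, for illustration only.
cohort = CohortRevenue(starting_arr=1_000_000, expansion=250_000,
                       contraction=50_000, churned=100_000)
ltv = customer_ltv(monthly_revenue=500, gross_margin=0.8, monthly_churn=0.02)
print(f"NDR: {net_dollar_retention(cohort):.0%}")       # NDR: 110%
print(f"LTV/CAC: {ltv_to_cac(ltv, cac=5_000):.1f}")     # LTV/CAC: 4.0
```

The formulas themselves are simple. The agent's real work lies in finding the right inputs across messy spreadsheets and documents and applying the definitions consistently.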

The limitation right now isn't technical capability – it's trust and personalization. Booking a flight sounds simple until you realize how many preferences and constraints vary by person and situation. A graduate student might accept a 10-hour layover to save $200, while a successful executive pays thousands extra for a direct flight.
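One way to see why personalization matters is to score flights by a "generalized cost": the ticket price plus the traveler's own dollar value for each hour in transit. The sketch assumes that a single value-of-time number captures a person's preferences. That is a hypothetical simplification, since real travelers also care about airlines, seats, and arrival times. The fares are made up to match the example above.

```python
from dataclasses import dataclass


@dataclass
class Itinerary:
    label: str
    price_usd: float
    travel_hours: float


def generalized_cost(it: Itinerary, value_of_time_per_hour: float) -> float:
    """Ticket price plus the traveler's own dollar valuation of hours in transit."""
    return it.price_usd + value_of_time_per_hour * it.travel_hours


def best_option(options: list, value_of_time_per_hour: float) -> Itinerary:
    """Pick the itinerary with the lowest generalized cost for this traveler."""
    return min(options, key=lambda it: generalized_cost(it, value_of_time_per_hour))


# Made-up fares: the layover saves $200 but adds 10 hours.
options = [
    Itinerary("direct", price_usd=900.0, travel_hours=5.0),
    Itinerary("10-hour layover", price_usd=700.0, travel_hours=15.0),
]
print(best_option(options, value_of_time_per_hour=15.0).label)   # 10-hour layover
print(best_option(options, value_of_time_per_hour=500.0).label)  # direct
```

With these numbers, the break-even point is $20 per hour ($200 saved divided by 10 extra hours). An agent can only pick the right flight if it knows which side of that line its user falls on, and learning that is where trust and personal data come in.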

- Privacy barriers: People aren't ready to let AI systems record phone calls, read emails, and watch everything they do, even though that would enable much better personal assistants

- Microsoft Recall backlash: When Microsoft tried to screenshot everything users do, the privacy concerns were overwhelming

- Trust building requirement: Companies need massive user trust before implementing comprehensive life automation

- Internet monetization disruption: AI agents that ignore ads could fundamentally break how many websites make money

The Jarvis-like personal assistant everyone wants is technically possible but socially and economically complex. We need new frameworks for privacy, new business models for content creators, and new social norms around AI assistance.

What This Means for Everyone

Looking at all these developments together, we're clearly at an inflection point. The combination of rapidly improving AI capabilities, decreasing costs, and increasing adoption is creating feedback loops that are hard to predict but impossible to ignore.

The open source versus closed source debate is particularly interesting because, in Socher's telling, it mirrors what happened with web servers – Microsoft dominated initially, but open source solutions eventually captured 99.9% of the market. If that pattern holds, the companies building moats around AI models might find themselves becoming expensive infrastructure providers rather than value capturers.

- Future-proofing strategy: Rather than betting on specific AI models, smart organizations are building relationships with platforms that can adapt as new models emerge

- Citation and trust: The ability to trace AI answers back to specific sources becomes crucial as AI gets integrated into professional workflows

- Federated intelligence: Using multiple AI models and routing queries based on task type might be more effective than relying on any single model
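A federated setup can be as simple as classifying each query and dispatching it to whichever model handles that task type best. The minimal sketch below uses a keyword classifier as a toy stand-in; a production router might use a trained classifier or a small model instead. The three model functions are hypothetical placeholders for calls to real models or providers.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for calls to different models or providers.
def code_model(query: str) -> str:
    return f"[code-model] {query}"


def math_model(query: str) -> str:
    return f"[math-model] {query}"


def general_model(query: str) -> str:
    return f"[general-model] {query}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "code": code_model,
    "math": math_model,
    "general": general_model,
}

CODE_KEYWORDS = ("python", "function", "bug", "compile", "stack trace")


def classify(query: str) -> str:
    """Toy task classifier based on keywords; a real router might use a trained model."""
    q = query.lower()
    if any(keyword in q for keyword in CODE_KEYWORDS):
        return "code"
    if any(ch.isdigit() for ch in q) or "solve" in q or "integral" in q:
        return "math"
    return "general"


def route(query: str) -> str:
    """Send the query to whichever model handles its task type."""
    return ROUTES[classify(query)](query)


print(route("Fix this Python bug"))       # [code-model] Fix this Python bug
print(route("Solve 2x + 3 = 7"))          # [math-model] Solve 2x + 3 = 7
print(route("Summarize today's news"))    # [general-model] Summarize today's news
```

Because the routing table is separate from the models, swapping in a newer model means changing one entry. This is the same future-proofing argument as relying on platforms that can adapt as new models emerge.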

What excites me most is the scientific acceleration potential. If we really can compress decades of research into years, we're talking about solving problems that have plagued humanity for centuries. Cancer, aging, climate change, resource scarcity – these could all become engineering challenges rather than insurmountable obstacles.

The timeline Socher suggests for digital super intelligence – 18 to 24 months with sufficient funding – isn't just about building bigger models. It's about solving specific research bottlenecks that haven't received enough focused attention. Whether that timeline proves accurate or not, the direction is clear: we're moving from the age of scarce intelligence to the age of abundant intelligence, and that changes everything.